In neural networks and artificial intelligence, activation functions are critical. They are mathematical functions applied to each neuron’s output in a neural network to transform it into the desired output range.

Types of Activation Functions

There are several types of activation functions, but we will focus on the most commonly used ones.

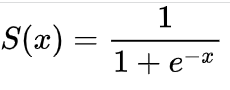

Sigmoid Function

The sigmoid function is a popular activation function that produces an output in the 0 to 1 range. It is especially beneficial for binary classification problems. The sigmoid function is defined as:

where x is the input to the function.

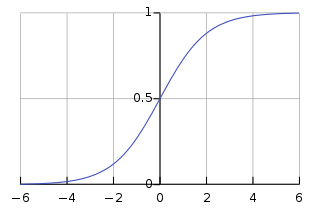

ReLU Function

The ReLU (Rectified Linear Unit) function is another commonly used activation function. It is particularly useful for deep neural networks. The ReLU function is defined as:

ReLU(x)=max(0,x)

where x is the input to the function.

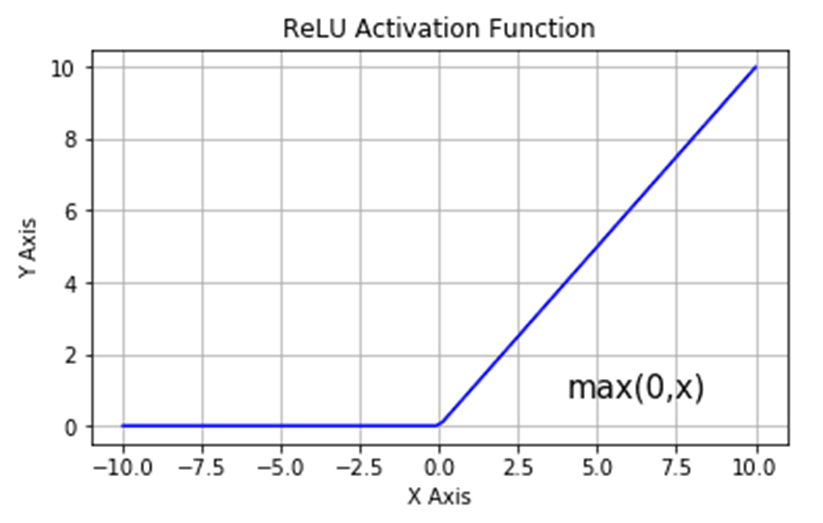

Softmax Function

The Softmax function is used in multi-class classification problems, where the output can belong to one of several classes. It normalizes the output so that it adds up to 1. The Softmax function is defined as:

where z is the input to the function.

where z is the input to the function.

Examples of Activation Functions

Image Classification

The input to the neural network in image classification is an image, and the output is a label that describes the image. The ReLU activation function is frequently used in the network’s hidden layers in this application, while the Softmax activation function is used in the output layer to generate the probability distribution over the possible labels.

Sentiment Analysis

The input in sentiment analysis is a piece of text, and the output is a classification of the text’s sentiment (positive, negative, or neutral). The sigmoid activation function is frequently used in the output layer of this application to generate a probability distribution over the possible sentiment labels.

Python Example of an Activation Function

Here is an example of how the ReLU activation function works in Python:

def relu(x):

return max(0, x)

# Test the ReLU function with some sample inputs

print(relu(2)) # Output: 2

print(relu(-2)) # Output: 0

In this example, the relu() function takes an input x and returns the ReLU output, which is the maximum of 0 and x. When x is positive, the output is the same as x, while when x is negative, the output is 0.

Conclusion

Activation functions are an essential component of neural networks and play a critical role in AI model accuracy. In this blog post, we discussed the various types of activation functions and provided examples of how they can be used in AI applications. AI practitioners can improve their models and achieve better performance by understanding activation functions.

References

- Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press. Aggarwal, C. C. (2018). Neural Networks and Deep Learning: A Textbook. Springer.

- Nielsen, M. (2015). Neural Networks and Deep Learning. Determination Press.

- PyTorch documentation: Activation Functions. (2021). Retrieved from https://pytorch.org/docs/stable/nn.functional.html#non-linear-activations-weighted-sum-nonlinearity.